Multi-Object Manipulation via Object-Centric Neural Scattering Functions

Stephen Tian1*, Yancheng Cai1*, Hong-Xing “Koven” Yu1, Sergey Zakharov2, Katherine Liu2, Adrien Gaidon2, Yunzhu Li1, Jiajun Wu1

* indicates equal contribution.

1 Stanford University 2 Toyota Research Institute

CVPR 2023

Abstract

Learned visual dynamics models have proven effective for robotic manipulation tasks. Yet, it remains unclear how best to represent scenes involving multi-object interactions. Current methods decompose a scene into discrete objects, but they struggle with precise modeling and manipulation under challenging lighting conditions because they only encode appearance tied to specific illuminations. In this work, we propose using object-centric neural scattering functions (OSFs) as object representations in a model-predictive control framework. OSFs model per-object light transport, enabling compositional scene re-rendering under object rearrangement and varying lighting conditions. By combining this approach with inverse parameter estimation and graph-based neural dynamics models, we demonstrate improved model-predictive control performance and generalization in compositional multi-object environments, even in previously unseen scenarios and harsh lighting conditions.

Inverse Parameter Estimation

Given Object-Centric Neural Scattering Functions (OSFs) for the objects in the scene, along with object masks, we use sampling-based optimizers to estimate object and light poses by minimizing photometric losses between compositionally rendered images and observations.
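The page does not name the sampling-based optimizer, so the sketch below assumes the cross-entropy method (CEM). It also replaces the OSF renderer with a hypothetical toy `render` that draws a Gaussian blob at a 2-D object position; `render`, `photometric_loss`, and `cem_estimate` are illustrative names, not the authors' code. Light parameters could be appended to the same parameter vector for joint object and light estimation.

```python
import math
import random

# Hypothetical toy renderer standing in for compositional OSF rendering:
# draws a Gaussian blob centered at the object's (x, y) position on an H x W grid.
H, W = 16, 16

def render(pose):
    x, y = pose
    return [[math.exp(-((c - x) ** 2 + (r - y) ** 2) / 8.0) for c in range(W)]
            for r in range(H)]

def photometric_loss(rendered, observed, mask):
    # Mean squared pixel error, restricted to pixels selected by the object mask.
    total, count = 0.0, 0
    for r in range(H):
        for c in range(W):
            if mask[r][c]:
                total += (rendered[r][c] - observed[r][c]) ** 2
                count += 1
    return total / max(count, 1)

def cem_estimate(observed, mask, init_mean, init_std,
                 iters=20, samples=64, elites=8, seed=0):
    # Cross-entropy method: sample candidate poses from a Gaussian, keep the
    # lowest-loss candidates (elites), and refit the Gaussian to them.
    rng = random.Random(seed)
    mean, std = list(init_mean), list(init_std)
    dims = len(mean)
    for _ in range(iters):
        cands = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                 for _ in range(samples)]
        cands.sort(key=lambda p: photometric_loss(render(p), observed, mask))
        elite = cands[:elites]
        mean = [sum(p[d] for p in elite) / elites for d in range(dims)]
        std = [max(1e-3, math.sqrt(sum((p[d] - mean[d]) ** 2 for p in elite) / elites))
               for d in range(dims)]
    return mean
```

For example, `cem_estimate(render((9.0, 5.0)), [[True] * W for _ in range(H)], [8.0, 8.0], [4.0, 4.0])` recovers a pose near `(9, 5)` from an initial guess of `(8, 8)`. Sampling-based search only needs loss evaluations, so it works even when the renderer is not differentiable with respect to the pose.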

OSF object renders

Light pose optimization

The image on the left shows the observation from the environment. The middle column shows the rendered scene as the position of the light (shown in green in the right column) is optimized. Here we assume the object pose is known.
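When the object pose is known, only the light parameters need to be searched. As a hedged sketch of that restricted search, the code below assumes a directional light parameterized by azimuth and elevation (the page optimizes a light position), Lambertian shading over a fixed set of surface normals in place of OSF rendering, and a deterministic coarse-to-fine grid search as the sampling strategy. None of these choices come from the source; `shade` and `estimate_light` are hypothetical names.

```python
import math

# Known object geometry (object pose held fixed): unit normals sampled over
# the upper hemisphere of a sphere. A toy stand-in for the OSF render.
NORMALS = [
    (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))
    for el in [i * math.pi / 12 for i in range(6)]
    for az in [j * math.pi / 6 for j in range(12)]
]

def light_dir(az, el):
    # Unit vector pointing toward a distant (directional) light.
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))

def shade(az, el, albedo=0.8):
    # Lambertian shading: albedo * max(0, n . l) for every surface normal.
    l = light_dir(az, el)
    return [albedo * max(0.0, n[0] * l[0] + n[1] * l[1] + n[2] * l[2]) for n in NORMALS]

def loss(rendered, observed):
    return sum((a - b) ** 2 for a, b in zip(rendered, observed)) / len(observed)

def estimate_light(observed, rounds=4, steps=9):
    # Coarse-to-fine grid search: evaluate a grid of candidate lights around
    # the current best, keep the lowest-loss one, then shrink the grid.
    best = (0.0, math.pi / 4)
    span_az, span_el = math.pi, math.pi / 4
    for _ in range(rounds):
        cands = [
            (best[0] + span_az * (2 * i / (steps - 1) - 1),
             min(math.pi / 2, max(0.0, best[1] + span_el * (2 * j / (steps - 1) - 1))))
            for i in range(steps) for j in range(steps)
        ]
        best = min(cands, key=lambda c: loss(shade(*c), observed))
        span_az /= 3
        span_el /= 3
    return best
```

Shrinking the grid by a factor of three each round keeps the neighborhood of the previous best inside the next grid while refining the resolution.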

Object pose optimization

Joint optimization

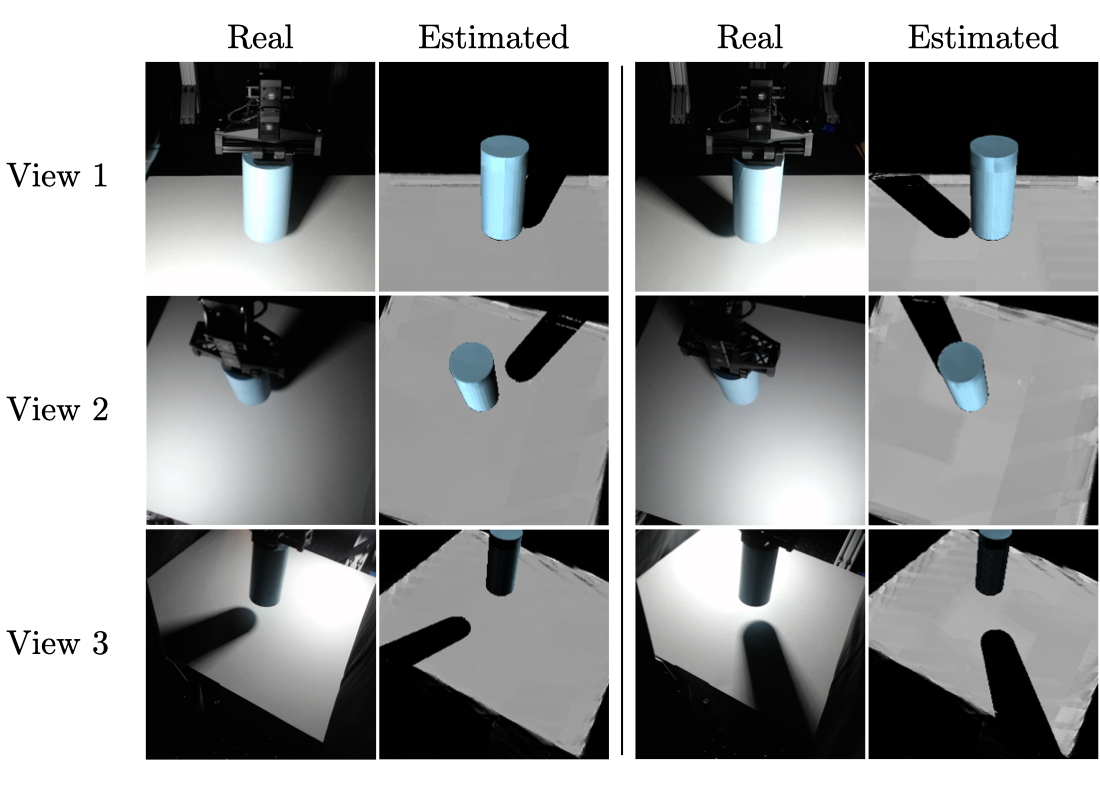

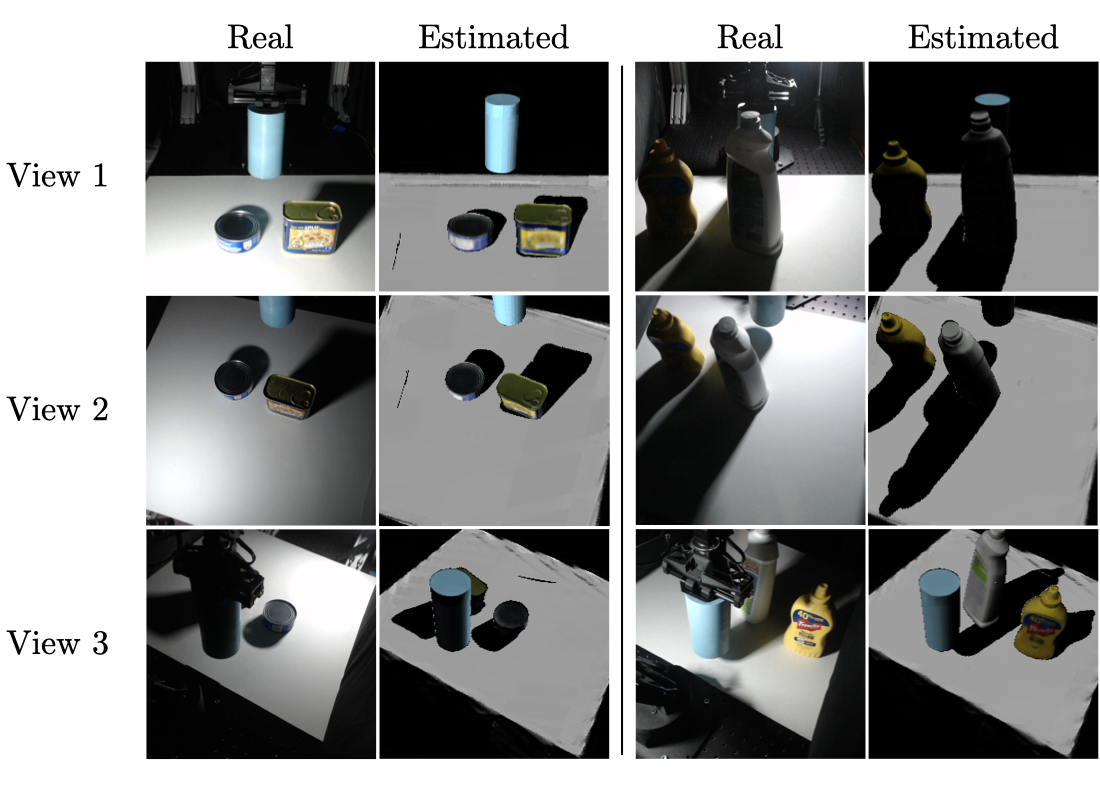

Parameter estimation on real images

Model-Predictive Control

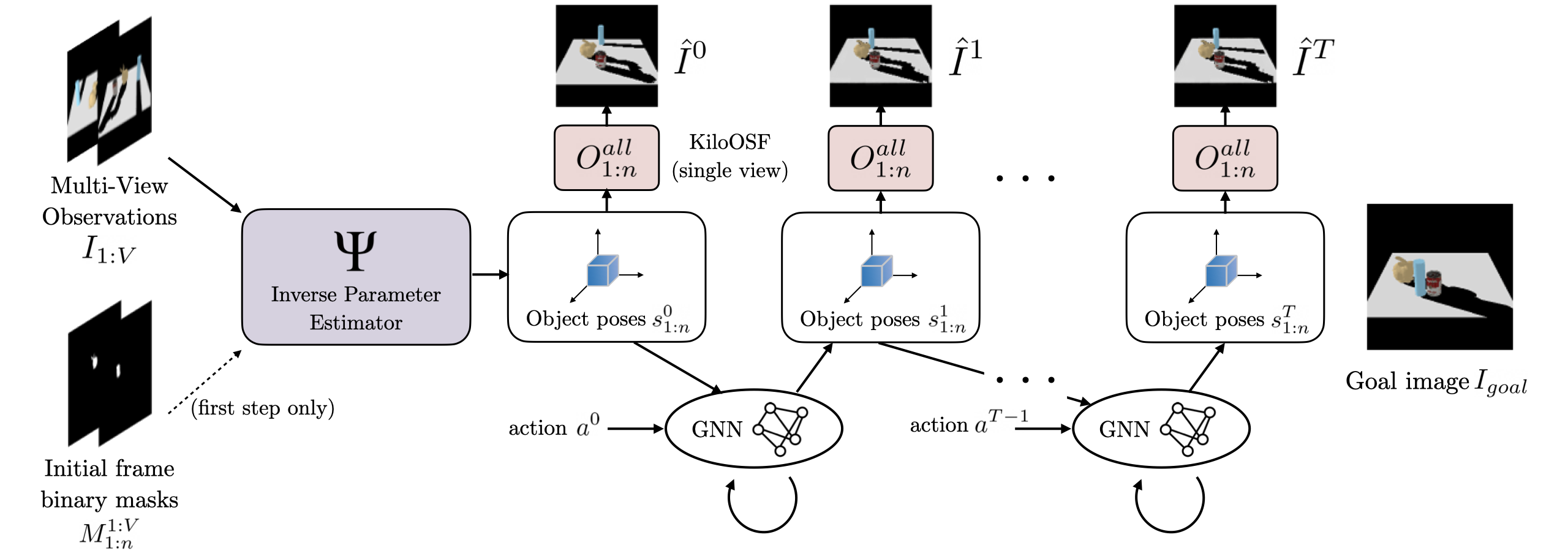

We learn a graph-based dynamics model to predict how a robot’s action (represented by the blue cylinder) affects the scene. We use this in combination with our inverse parameter estimator to perform model-predictive control, as shown below.
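The page does not detail the model's architecture. As a hedged illustration of what "graph-based" can mean here, the sketch below treats the pusher (the blue cylinder) and each object as nodes, connects nodes closer than a radius, and runs one round of message passing to predict per-object displacements. The class `GraphDynamics`, its feature layout, the radius, and the untrained random weights are all assumptions for illustration.

```python
import math
import random

def init_mlp(sizes, rng):
    # Random weights and zero biases for a small fully connected network;
    # in practice these parameters would be trained on interaction data.
    return [([[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
              for _ in range(n_out)], [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    # Affine layers with tanh between them (no activation on the output).
    for k, (w, b) in enumerate(params):
        x = [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]
        if k < len(params) - 1:
            x = [math.tanh(v) for v in x]
    return x

class GraphDynamics:
    # Hypothetical one-round message-passing model over object and pusher nodes.
    def __init__(self, msg_dim=8, hidden=16, radius=2.0, seed=0):
        rng = random.Random(seed)
        self.msg_dim, self.radius = msg_dim, radius
        # Node features: [is_pusher, action_x, action_y]. Edges add the relative
        # position (dx, dy), so predictions do not depend on absolute position.
        self.edge_mlp = init_mlp([2 + 3 + 3, hidden, msg_dim], rng)
        self.node_mlp = init_mlp([3 + msg_dim, hidden, 2], rng)

    def step(self, positions, pusher_idx, action):
        n = len(positions)
        feats = [[1.0, action[0], action[1]] if i == pusher_idx else [0.0, 0.0, 0.0]
                 for i in range(n)]
        # Sum messages from every node within `radius` (the interaction graph).
        agg = [[0.0] * self.msg_dim for _ in range(n)]
        for i in range(n):
            for j in range(n):
                dx = positions[j][0] - positions[i][0]
                dy = positions[j][1] - positions[i][1]
                if i != j and math.hypot(dx, dy) < self.radius:
                    msg = mlp(self.edge_mlp, [dx, dy] + feats[i] + feats[j])
                    agg[i] = [a + m for a, m in zip(agg[i], msg)]
        nxt = []
        for i, (x, y) in enumerate(positions):
            if i == pusher_idx:
                # The pusher is commanded directly, so it follows the action.
                nxt.append((x + action[0], y + action[1]))
            else:
                dx, dy = mlp(self.node_mlp, feats[i] + agg[i])
                nxt.append((x + dx, y + dy))
        return nxt
```

Because messages are summed over neighbors, reordering the objects only reorders the predictions, which lets the same model handle scenes with different numbers and arrangements of objects.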

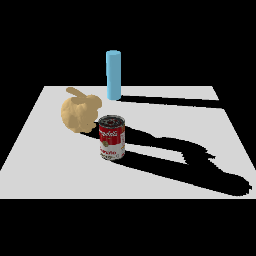

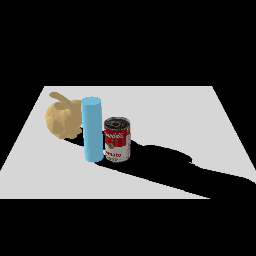

Here we visualize the planning process to reach the goal image shown on the right. The orange silhouettes show the dynamics predictions for the best action sequence found at each step.
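The page does not name the planner, so the sketch below assumes random shooting inside a receding-horizon loop: sample action sequences, roll each out through a dynamics model, execute only the first action of the lowest-cost sequence, and replan. To stay self-contained it swaps the learned graph model and the goal-image cost for hypothetical stand-ins: a 1-D pushing toy (`toy_dynamics`, which also plays the role of the real environment) and the distance between the object and a goal position.

```python
import random

WIDTH = 0.5      # hypothetical contact offset between pusher and object centers
MAX_STEP = 0.3   # hypothetical bound on each pusher displacement

def toy_dynamics(state, action):
    # Stand-in for the learned dynamics model (1-D pushing toy): the pusher
    # moves by `action`, and the object is shoved ahead of it whenever the
    # pusher would otherwise overlap it.
    pusher, obj = state
    pusher += action
    obj = max(obj, pusher + WIDTH)
    return (pusher, obj)

def rollout_cost(state, actions, goal):
    for a in actions:
        state = toy_dynamics(state, a)
    pusher, obj = state
    # Object-to-goal distance, plus a small term rewarding pusher contact.
    return abs(obj - goal) + 0.1 * abs(pusher + WIDTH - obj)

def plan(state, goal, rng, horizon=8, samples=300):
    # Random shooting: sample action sequences, score each with the model,
    # and return the lowest-cost sequence.
    best_seq, best_cost = None, float("inf")
    for _ in range(samples):
        seq = [rng.uniform(-MAX_STEP, MAX_STEP) for _ in range(horizon)]
        cost = rollout_cost(state, seq, goal)
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq

def run_mpc(state, goal, steps=30, seed=0):
    # Receding horizon: execute only the first planned action, then replan.
    rng = random.Random(seed)
    for _ in range(steps):
        action = plan(state, goal, rng)[0]
        state = toy_dynamics(state, action)
    return state
```

For example, `run_mpc((0.0, 1.0), goal=3.0)` starts the pusher at 0 and the object at 1 and pushes the object toward 3. Replanning after every executed action lets the controller correct for errors in the predicted rollouts, which matters when the dynamics model is learned.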

Video Overview

The first minute provides a brief, high-level summary. The rest of the video discusses each component in more detail.

BibTeX

@inproceedings{tian2023multi,
  title={Multi-Object Manipulation via Object-Centric Neural Scattering Functions},
  author={Stephen Tian and Yancheng Cai and Hong-Xing Yu and Sergey Zakharov and Katherine Liu and Adrien Gaidon and Yunzhu Li and Jiajun Wu},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2023}
}